
Inter-Process Communication in Linux

Inter-process communication (IPC) in Linux using the C language involves several methods, such as pipes, message queues, shared memory, and semaphores. Each of these methods suits different use cases and comes with a different level of complexity. In this tutorial, I'll give you a basic introduction to each method and show how you can use it in C on your embedded Linux system.

Pipes

Pipes are one of the simplest forms of IPC, allowing one-way communication between processes. A pipe can be used by a parent process to communicate with its child process.

Example:

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <unistd.h>

int main() {

int pipefds[2];

char buffer[30];

// Create a pipe

if (pipe(pipefds) == -1) {

perror("pipe");

exit(EXIT_FAILURE);

}

// Fork a new process

pid_t pid = fork();

// Check if fork was successful

if (pid == -1) {

perror("fork");

exit(EXIT_FAILURE);

}

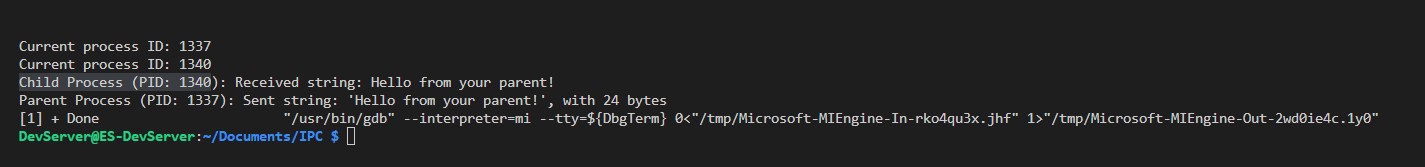

printf("Current process ID: %d\n", getpid());

if (pid == 0) {

// Child process

// Ensure there's no garbage data

memset(buffer, 0, sizeof(buffer));

if (read(pipefds[0], buffer, sizeof(buffer)) == -1) {

perror("read");

exit(EXIT_FAILURE);

}

printf("Child Process (PID: %d): Received string: %s\n", getpid(), buffer);

exit(EXIT_SUCCESS);

} else {

// Parent process

const char* msg = "Hello from your parent!";

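// write() returns ssize_t: -1 signals an error, so a signed type is required

ssize_t retValue = write(pipefds[1], msg, strlen(msg) + 1); // +1 for the NUL terminator

if (retValue == -1) {

perror("write");

exit(EXIT_FAILURE);

}

printf("Parent Process (PID: %d): Sent string: '%s', with %zd bytes\n", getpid(), msg, retValue);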

}

return 0;

}

To better understand the structure of our C code, I will now use a PlantUML diagram. This diagram illustrates the main components of the C code.

@startuml

!theme toy

participant "Main Process" as main

participant "pipe()" as pipe

participant "fork()" as fork

participant "Child Process" as child

participant "read()" as read

participant "Parent Process" as parent

participant "write()" as write

main -> pipe : Create a pipe

alt pipe success

main -> fork : Fork a new process

alt pid == -1

fork -> main : fork failed

main -> main : perror("fork")\nexit(EXIT_FAILURE)

else pid == 0

== Child Process ==

fork -> child : In child process

child -> read : read(pipefds[0], buffer, sizeof(buffer))

read -> child : Buffer filled

child -> child : printf("Child Process (PID: %d): Received string: %s\n", getpid(), buffer)

child -> child : exit(EXIT_SUCCESS)

else

== Parent Process ==

fork -> parent : In parent process

parent -> write : write(pipefds[1], msg, strlen(msg) + 1)

write -> parent : Message sent

parent -> parent : printf("Parent Process (PID: %d): Sent string: '%s', with %zu bytes\n", getpid(), msg, retValue)

end

else

pipe -> main : pipe failed

main -> main : perror("pipe")\nexit(EXIT_FAILURE)

end

@enduml

The key aspect to understand is that, due to the lack of explicit synchronization between the parent and the child processes, the order in which they execute is not predetermined. The operating system's scheduler does not prioritize either the parent or the child process, leading to an indeterminate execution sequence. This unpredictability might raise concerns about how the child process appears to read from the pipe before the parent has written to it. However, this scenario is managed by the blocking nature of pipes, which ensures that if the child attempts to read before the parent writes, it will simply block and wait for the parent to write data, thereby maintaining the correct sequence of operations.

Here are key points to clarify this behavior:

Blocking Behavior of Pipes

- Pipes block by default. If a process tries to read from an empty pipe (no data written yet), the read operation blocks the process until there is data to read.

- Similarly, if the pipe's internal buffer is full and a process tries to write to it, the process is blocked until there is space in the pipe for more data.
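The words "by default" matter here: blocking is a property of the file descriptor that can be switched off. The sketch below is a minimal illustration, not part of the original example. It assumes a hypothetical helper name, empty_read_would_block(), and uses fcntl() with O_NONBLOCK so that reading an empty pipe fails immediately with EAGAIN instead of waiting.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/* Hypothetical helper: returns 1 if reading an empty, non-blocking pipe
 * fails with EAGAIN (i.e. a blocking read would have waited),
 * 0 if it did not, and -1 if the setup itself failed. */
int empty_read_would_block(void)
{
    int fds[2];
    char byte;

    if (pipe(fds) == -1)
        return -1;

    /* Switch the read end from the default blocking mode to non-blocking. */
    int flags = fcntl(fds[0], F_GETFL);
    if (flags == -1 || fcntl(fds[0], F_SETFL, flags | O_NONBLOCK) == -1) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }

    /* Nothing has been written yet, so there is no data to read. */
    ssize_t n = read(fds[0], &byte, 1);
    int would_block = (n == -1 && (errno == EAGAIN || errno == EWOULDBLOCK));

    close(fds[0]);
    close(fds[1]);
    return would_block;
}
```

Without the F_SETFL call, the same read() would simply wait until another process wrote to the pipe, which is the behavior the tutorial's example relies on.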

Scheduling and Execution Order

- After a call to fork(), both the parent and the child processes are runnable, and the operating system's scheduler decides which process runs first. This decision can lead to non-deterministic behavior in terms of execution order unless explicitly synchronized.

- In many cases, the parent process might run first and write to the pipe, allowing the child process to read immediately once it gets scheduled. However, it's also possible for the child process to execute first, in which case it would block on the read operation until the parent writes to the pipe.

In This Specific Scenario

- In the given code, there is no explicit synchronization mechanism (like using wait(), waitpid(), signaling, or similar) to control the order of execution between the parent and child processes concerning the pipe operations. This means the child process might attempt to read from the pipe before the parent has written to it.

- Because of the pipe's blocking nature, if the child attempts to read before the parent writes, it will simply wait (block) until the parent writes data into the pipe. This ensures that even if the child process runs first and tries to read, it won't finish the read() call until the parent process writes the data to the pipe.
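For comparison, here is a hedged sketch of the same parent-to-child transfer with the explicit steps the example leaves out: each process closes the pipe end it does not use, and the parent calls waitpid() to collect the child. The helper name send_to_child() and the idea of reporting the byte count through the child's exit status are assumptions made for illustration only.

```c
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: sends msg (including its NUL terminator, at most
 * 63 bytes in total) to a forked child. Returns the number of bytes the
 * child reports having read, or -1 on error. */
int send_to_child(const char *msg)
{
    int pipefds[2];
    size_t len = strlen(msg) + 1;

    if (len > 63 || pipe(pipefds) == -1)
        return -1;

    pid_t pid = fork();
    if (pid == -1) {
        close(pipefds[0]);
        close(pipefds[1]);
        return -1;
    }

    if (pid == 0) {
        /* Child: it only reads, so it closes the write end first. */
        char buffer[64];
        close(pipefds[1]);
        ssize_t n = read(pipefds[0], buffer, sizeof(buffer) - 1);
        close(pipefds[0]);
        /* Report the byte count through the exit status (255 = error). */
        _exit(n < 0 ? 255 : (int)n);
    }

    /* Parent: it only writes, so it closes the read end first. */
    close(pipefds[0]);
    ssize_t written = write(pipefds[1], msg, len);
    close(pipefds[1]); /* the child sees end-of-file after the data */

    /* Explicit synchronization: wait until the child has finished. */
    int status;
    if (waitpid(pid, &status, 0) == -1)
        return -1;
    if (written != (ssize_t)len || !WIFEXITED(status))
        return -1;
    return WEXITSTATUS(status);
}
```

Calling send_to_child("hello") should return 6: five characters plus the terminator. Waiting for the child also prevents it from lingering as a zombie process after it exits.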

Why pipefds Has Only Two Elements

In Unix-like operating systems, a pipe is a form of inter-process communication (IPC) that allows data to flow from one process to another in a unidirectional flow. The pipe() system call is used to create a pipe, and it provides two file descriptors:

- pipefds[0]: This is the read end of the pipe. Data written to the pipe by one process can be read from this end by another process.

- pipefds[1]: This is the write end of the pipe. Data can be written to this end of the pipe by one process and read from the other end (pipefds[0]) by another process.

The array pipefds has only two elements because that's all that's needed to represent a single pipe: one end for reading and the other end for writing. The operating system handles the connection between these two ends internally, ensuring that data flows from the write end to the read end. This simple arrangement allows for straightforward, unidirectional communication between processes.

When you use pipe(pipefds), the operating system assigns file descriptors to pipefds[0] and pipefds[1] for the read and write ends of the pipe, respectively. These file descriptors can then be used with standard Unix file operation system calls (read(), write(), close(), etc.) to perform IPC. The unidirectional nature of pipes is often complemented by creating two pipes if bidirectional communication is necessary, one for each direction of data flow.
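The two-pipe arrangement mentioned above can be sketched as follows. This is an illustrative example under stated assumptions, not code from the original program: the helper name upper_via_child(), the 256-byte limit, and the choice of upper-casing as the child's "work" are all hypothetical. One pipe carries the request to the child, the other carries the reply back.

```c
#include <ctype.h>
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: sends msg to a child over one pipe and receives the
 * upper-cased copy over a second pipe. Returns 0 on success, -1 on error. */
int upper_via_child(const char *msg, char *reply, size_t reply_size)
{
    int to_child[2], to_parent[2];
    size_t len = strlen(msg) + 1; /* include the NUL terminator */

    if (len > reply_size || len > 256)
        return -1;
    if (pipe(to_child) == -1)
        return -1;
    if (pipe(to_parent) == -1) {
        close(to_child[0]);
        close(to_child[1]);
        return -1;
    }

    pid_t pid = fork();
    if (pid == -1) {
        close(to_child[0]);
        close(to_child[1]);
        close(to_parent[0]);
        close(to_parent[1]);
        return -1;
    }

    if (pid == 0) {
        /* Child: reads from to_child, writes to to_parent. */
        char buf[256];
        close(to_child[1]);
        close(to_parent[0]);
        ssize_t n = read(to_child[0], buf, sizeof(buf));
        for (ssize_t i = 0; i < n; i++)
            buf[i] = (char)toupper((unsigned char)buf[i]);
        if (n <= 0 || write(to_parent[1], buf, (size_t)n) != n)
            _exit(1);
        _exit(0);
    }

    /* Parent: writes to to_child, reads from to_parent. */
    close(to_child[0]);
    close(to_parent[1]);
    ssize_t sent = write(to_child[1], msg, len);
    close(to_child[1]);
    ssize_t got = -1;
    if (sent == (ssize_t)len)
        got = read(to_parent[0], reply, reply_size);
    close(to_parent[0]);

    int status;
    waitpid(pid, &status, 0);
    return (got == (ssize_t)len && reply[len - 1] == '\0') ? 0 : -1;
}
```

Each process closes the two ends it does not need, so every pipe keeps exactly one writer and one reader. The message is kept small so that each write() is delivered in one piece.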

In this IPC example, the blocking nature of pipe operations provides implicit synchronization. It ensures data coherence and, where necessary, sequential execution, even if the execution order of the parent and child processes might seem counterintuitive at first.

Message Queues

Message queues in Linux are a part of the POSIX message queues API, which allows processes to communicate asynchronously by sending and receiving messages. A message queue is identified by a name and can be used by multiple processes. Each message in the queue is stored with an associated priority, which can affect the order in which messages are received.

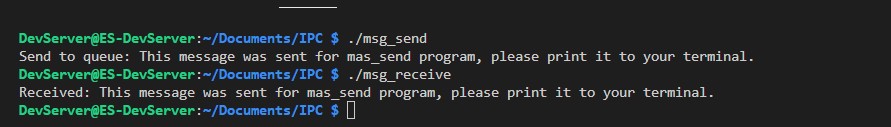

Below is a simple C program example that demonstrates the creation of a message queue, sending a message to the queue, and then receiving that message from the queue. This example consists of two parts:

- Sending a message to the queue (msg_send.c) - This part of the program creates a message queue and sends a message to it.

- Receiving a message from the queue (msg_receive.c) - This part receives the message sent to the queue and displays it.

Part 1: Sending a Message (msg_send.c)

#include <stdio.h>

#include <stdlib.h>

#include <fcntl.h> /* For O_* constants */

#include <sys/stat.h> /* For mode constants */

#include <mqueue.h>

#define QUEUE_NAME "/test_queue"

#define MAX_SIZE 1024

#define MSG_STOP "exit"

int main() {

mqd_t mq;

char buffer[MAX_SIZE] = {0}; // zero-filled, because mq_send() below transmits all MAX_SIZE bytes

// Open a message queue

mq = mq_open(QUEUE_NAME, O_CREAT | O_WRONLY, 0644, NULL);

if (mq == (mqd_t)-1) {

perror("mq_open");

exit(EXIT_FAILURE);

}

// Send a message

printf("Send to queue: ");

fflush(stdout);

if (fgets(buffer, MAX_SIZE, stdin) == NULL) {

fprintf(stderr, "No input to send\n");

mq_close(mq);

exit(EXIT_FAILURE);

}

if (mq_send(mq, buffer, MAX_SIZE, 0) == -1) {

perror("mq_send");

exit(EXIT_FAILURE);

}

// Close the message queue

mq_close(mq);

return 0;

}

To better understand the structure of our C code, I will now use a PlantUML diagram. This diagram illustrates the main components of the C code.

@startuml

!theme toy

participant "msg_send.c" as sender

participant "Message Queue" as queue

sender -> queue : mq_open(QUEUE_NAME, O_CREAT | O_WRONLY)

note right: Open/Create the message queue for sending

sender -> sender : fgets(buffer, MAX_SIZE, stdin)

note right: Read message from user

sender -> queue : mq_send(mq, buffer, MAX_SIZE, 0)

note right: Send message to the queue

sender -> queue : mq_close(mq)

note right: Close the message queue

@enduml

Part 2: Receiving a Message (msg_receive.c)

#include <stdio.h>

#include <stdlib.h>

#include <fcntl.h> /* For O_* constants */

#include <sys/stat.h> /* For mode constants */

#include <mqueue.h>

#define QUEUE_NAME "/test_queue"

int main() {

mqd_t mq;

struct mq_attr attr;

char *buffer;

// Open the message queue

mq = mq_open(QUEUE_NAME, O_RDONLY);

if (mq == (mqd_t)-1) {

perror("mq_open");

exit(EXIT_FAILURE);

}

// Get the attributes of the queue

if (mq_getattr(mq, &attr) == -1) {

perror("mq_getattr");

exit(EXIT_FAILURE);

}

// Allocate buffer for the largest possible message

buffer = malloc(attr.mq_msgsize);

if (buffer == NULL) {

perror("malloc");

exit(EXIT_FAILURE);

}

// Receive a message

if (mq_receive(mq, buffer, attr.mq_msgsize, NULL) == -1) {

perror("mq_receive");

free(buffer);

exit(EXIT_FAILURE);

}

printf("Received: %s", buffer);

// Clean up

free(buffer);

mq_close(mq);

mq_unlink(QUEUE_NAME);

return 0;

}

To better understand the structure of our C code, I will now use a PlantUML diagram. This diagram illustrates the main components of the C code.

@startuml

!theme toy

participant "Main Process" as main

participant "mq_open()" as mq_open

participant "mq_getattr()" as mq_getattr

participant "malloc()" as malloc

participant "mq_receive()" as mq_receive

participant "printf()" as printf

participant "free()" as free

participant "mq_close()" as mq_close

participant "mq_unlink()" as mq_unlink

main -> mq_open : Open message queue

mq_open -> main : mqd_t mq

main -> mq_getattr : Get attributes of the queue

mq_getattr -> main : struct mq_attr attr

main -> malloc : Allocate buffer based on mq_msgsize

malloc -> main : char* buffer

main -> mq_receive : Receive message

mq_receive -> main : Fill buffer with received message

main -> printf : Print received message

printf -> main :

main -> free : Free allocated buffer

free -> main :

main -> mq_close : Close message queue

mq_close -> main :

main -> mq_unlink : Unlink message queue

mq_unlink -> main :

@enduml

Explanation

- Message Queue Creation and Opening: A message queue is created/opened using mq_open(). The O_CREAT flag is used to create the queue if it doesn't exist. The name of the queue must start with a /. For sending, O_WRONLY is used, and for receiving, O_RDONLY.

- Sending a Message: mq_send() sends a message to the queue. The message is placed in the queue according to its priority.

- Receiving a Message: mq_receive() receives the oldest of the highest priority messages from the queue.

- Closing and Unlinking: After operations, the message queue is closed using mq_close(). To remove the message queue, mq_unlink() is used. It's typically done by the receiver after ensuring all messages are processed to clean up.
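The priority ordering described above can be checked with a small sketch. It is an illustration under stated assumptions rather than part of the original programs: the queue name /prio_demo_queue, the helper name first_received_priority(), and the chosen queue sizes are all hypothetical. A low-priority message is sent first and a high-priority one second, yet mq_receive() hands back the high-priority message first.

```c
#include <fcntl.h>    /* For O_* constants */
#include <mqueue.h>
#include <sys/stat.h> /* For mode constants */

#define DEMO_QUEUE "/prio_demo_queue" /* hypothetical queue name */

/* Hypothetical helper: returns the priority of the first message received,
 * or -1 if any step fails. */
int first_received_priority(void)
{
    struct mq_attr attr = {0};
    char buf[32];
    unsigned int prio = 0;
    int result = -1;

    attr.mq_maxmsg = 4;   /* queue holds at most 4 messages */
    attr.mq_msgsize = 32; /* each message is at most 32 bytes */

    mq_unlink(DEMO_QUEUE); /* drop a stale queue from an earlier run, if any */
    mqd_t mq = mq_open(DEMO_QUEUE, O_CREAT | O_EXCL | O_RDWR, 0600, &attr);
    if (mq == (mqd_t)-1)
        return -1;

    /* Send priority 1 first, then priority 5. */
    if (mq_send(mq, "routine", 8, 1) == 0 &&
        mq_send(mq, "urgent", 7, 5) == 0 &&
        mq_receive(mq, buf, sizeof(buf), &prio) != -1)
        result = (int)prio; /* the priority-5 message comes out first */

    mq_close(mq);
    mq_unlink(DEMO_QUEUE);
    return result;
}
```

Note that the buffer passed to mq_receive() must be at least mq_msgsize bytes long, which is why the receiver in the tutorial queries the attributes with mq_getattr() before allocating its buffer.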

Compilation and Running

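To compile these programs, use the -lrt flag to link the real-time library. On recent glibc versions (2.34 and later) these functions are part of libc itself, so the flag is harmless but no longer required: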

gcc msg_send.c -o msg_send -lrt

gcc msg_receive.c -o msg_receive -lrt

Run msg_send to send a message, and msg_receive in another terminal to receive the message.

This example demonstrates basic message queue operations. Error checking is minimal for brevity, but in real applications, thorough error checking is essential.

Shared Memory

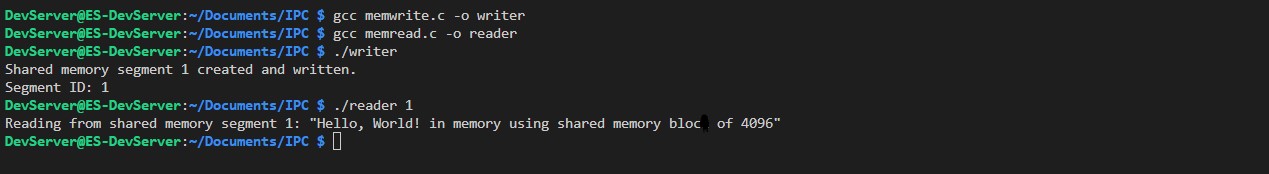

Shared memory is a powerful IPC mechanism that allows two or more processes to share a memory segment. This can be very efficient because data does not need to be copied between processes; they access the shared memory area directly. Here's a simple example in C that demonstrates using shared memory for IPC in Linux. The example consists of two parts: a writer (which creates and writes to the shared memory) and a reader (which reads from the shared memory).

Shared Memory: Writer

This part of the program creates a shared memory segment and writes a message to it.

// writer.c

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <sys/shm.h>

#include <sys/stat.h>

int main() {

int segment_id;

char* shared_memory;

const int size = 4096; // Size of the shared memory segment

const char* message = "Hello, World! in memory using shared memory bloc of 4096";

// Allocate a shared memory segment

segment_id = shmget(IPC_PRIVATE, size, S_IRUSR | S_IWUSR);

if (segment_id == -1) {

perror("shmget");

exit(EXIT_FAILURE);

}

// Attach the shared memory segment

shared_memory = (char*)shmat(segment_id, NULL, 0);

if (shared_memory == (char*)-1) {

perror("shmat");

exit(EXIT_FAILURE);

}

// Write a message to the shared memory segment

sprintf(shared_memory, "%s", message);

printf("Shared memory segment %d created and written.\n", segment_id);

// Print the segment ID (to be used by the reader)

printf("Segment ID: %d\n", segment_id);

// Detach the shared memory segment

if (shmdt(shared_memory) == -1) {

perror("shmdt");

exit(EXIT_FAILURE);

}

return 0;

}

To better understand the structure of our C code, I will now use a PlantUML diagram. This diagram illustrates the main components of the C code.

@startuml

!theme toy

participant "Main Process (Writer)" as main

participant "shmget()" as shmget

participant "shmat()" as shmat

participant "sprintf()" as sprintf

participant "printf()" as printf

participant "shmdt()" as shmdt

main -> shmget : Allocate shared memory segment

shmget -> main : Returns segment_id

main -> shmat : Attach segment to process

shmat -> main : Returns pointer to shared_memory

main -> sprintf : Write message to shared_memory

main -> printf : Print segment_id

main -> shmdt : Detach the shared memory segment

shmdt -> main :

@enduml

Shared Memory: Reader

This part reads the message from the shared memory segment created by the writer.

// reader.c

#include <stdio.h>

#include <stdlib.h>

#include <sys/shm.h>

int main(int argc, char *argv[]) {

int segment_id;

char* shared_memory;

const int size = 4096; // Size of the shared memory segment

if (argc != 2) {

fprintf(stderr, "Usage: %s <segment_id>\n", argv[0]);

exit(EXIT_FAILURE);

}

segment_id = atoi(argv[1]);

// Attach the shared memory segment

shared_memory = (char*)shmat(segment_id, NULL, 0);

if (shared_memory == (char*)-1) {

perror("shmat");

exit(EXIT_FAILURE);

}

// Read from the shared memory segment

printf("Reading from shared memory segment %d: \"%s\"\n", segment_id, shared_memory);

// Detach the shared memory segment

if (shmdt(shared_memory) == -1) {

perror("shmdt");

exit(EXIT_FAILURE);

}

// Optionally, remove the shared memory segment

// shmctl(segment_id, IPC_RMID, NULL);

return 0;

}

To better understand the structure of our C code, I will now use a PlantUML diagram. This diagram illustrates the main components of the C code.

@startuml

!theme toy

participant "Main Process (Reader)" as main

participant "shmat()" as shmat

participant "printf()" as printf

participant "shmdt()" as shmdt

main -> shmat : Attach shared memory segment by segment_id

shmat -> main : Returns pointer to shared_memory

main -> printf : Read and print message from shared_memory

main -> shmdt : Detach the shared memory segment

shmdt -> main :

@enduml

How It Works

- The writer program creates a shared memory segment and writes a message to it. It prints the ID of the shared memory segment, which you need to note.

- The reader program needs the shared memory segment ID as an argument to attach to and read from the shared memory.

Compilation

Compile both programs:

gcc writer.c -o writer

gcc reader.c -o reader

Running

First, run the writer to create the shared memory segment and write to it:

./writer

Note the printed segment ID, then run the reader, passing the segment ID as an argument:

./reader <segment_id>

This should display the message written by the writer.

Explanation

- shmget creates a new shared memory segment or obtains access to an existing one.

- shmat attaches the shared memory segment identified by the segment ID to the address space of the calling process.

- shmdt detaches the shared memory segment.

- shmctl can perform various operations on the shared memory segment, such as removing it with IPC_RMID.

Shared memory is a low-level, efficient form of IPC but requires careful synchronization between processes to avoid concurrent access issues. For synchronization, mechanisms like semaphores or POSIX synchronization APIs are commonly used.
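As a minimal sketch of that synchronization requirement, the example below lets a child write into a segment and makes the parent wait for the child with waitpid() before reading. This is the simplest possible ordering guarantee, standing in for the semaphores mentioned above. The helper name shm_roundtrip() is hypothetical. The sketch also removes the segment with shmctl() and IPC_RMID, a step that is left commented out in the reader.

```c
#include <sys/ipc.h>
#include <sys/shm.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: a child stores value in shared memory and the parent
 * reads it back. Returns the value read, or -1 on error (so -1 itself is
 * not a useful test value). */
int shm_roundtrip(int value)
{
    int id = shmget(IPC_PRIVATE, sizeof(int), S_IRUSR | S_IWUSR);
    if (id == -1)
        return -1;

    int *shared = shmat(id, NULL, 0);
    if (shared == (void *)-1) {
        shmctl(id, IPC_RMID, NULL);
        return -1;
    }
    *shared = 0;

    int result = -1;
    pid_t pid = fork();
    if (pid == 0) {
        /* Child: the attachment is inherited across fork(). */
        *shared = value;
        shmdt(shared);
        _exit(0);
    }
    if (pid > 0) {
        /* Parent: read only after the child has finished writing. */
        int status;
        if (waitpid(pid, &status, 0) == pid &&
            WIFEXITED(status) && WEXITSTATUS(status) == 0)
            result = *shared;
    }

    shmdt(shared);
    shmctl(id, IPC_RMID, NULL); /* remove the segment from the system */
    return result;
}
```

Without the IPC_RMID call, a System V segment stays allocated after every process has detached from it. You can list leftover segments with the ipcs command.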

Semaphores

Semaphores are synchronization primitives used to manage concurrent access to resources by multiple processes or threads. In Unix-like systems, POSIX semaphores can be used both for inter-process synchronization and for threads within the same process. Here's a simple example in C with explanation that demonstrates using a POSIX semaphore for synchronizing access between two processes.

Overview

This example will consist of two programs:

- sem_create.c: This program will create a semaphore and initialize it. It's responsible for setting up synchronization.

- sem_wait_post.c: This program will wait on the semaphore (decrementing it) before accessing a shared resource and then post to the semaphore (incrementing it) after it's done. For simplicity, we'll simulate access to a shared resource with sleep.

Step 1: Create and Initialize a Semaphore (sem_create.c)

#include <stdio.h>

#include <stdlib.h>

#include <semaphore.h>

#include <fcntl.h> /* For O_* constants */

#include <sys/stat.h> /* For mode constants */

#define SEM_NAME "/my_semaphore"

int main() {

sem_t *sem;

// Create and initialize the semaphore

sem = sem_open(SEM_NAME, O_CREAT | O_EXCL, 0644, 1);

if (sem == SEM_FAILED) {

perror("sem_open");

exit(EXIT_FAILURE);

}

printf("Semaphore created and initialized.\n");

// Close the semaphore

sem_close(sem);

return 0;

}

To better understand the structure of our C code, I will now use a PlantUML diagram. This diagram illustrates the main components of the C code.

@startuml

!theme toy

participant "sem_create Process" as create

participant "sem_open()" as sem_open

participant "printf()" as printf

participant "sem_close()" as sem_close

create -> sem_open : Create and initialize semaphore

sem_open -> create : Semaphore reference

create -> printf : Print confirmation message

create -> sem_close : Close the semaphore

sem_close -> create

@enduml

Step 2: Wait and Post to the Semaphore (sem_wait_post.c)

#include <stdio.h>

#include <stdlib.h>

#include <semaphore.h>

#include <fcntl.h> /* For O_* constants */

#include <unistd.h> /* For sleep() */

#define SEM_NAME "/my_semaphore"

int main() {

sem_t *sem;

// Open the semaphore

sem = sem_open(SEM_NAME, 0);

if (sem == SEM_FAILED) {

perror("sem_open");

exit(EXIT_FAILURE);

}

// Wait on the semaphore

if (sem_wait(sem) < 0) {

perror("sem_wait");

exit(EXIT_FAILURE);

}

printf("Entered the critical section.\n");

sleep(3); // Simulate critical section work

printf("Leaving the critical section.\n");

// Post to the semaphore

if (sem_post(sem) < 0) {

perror("sem_post");

exit(EXIT_FAILURE);

}

// Close the semaphore

sem_close(sem);

return 0;

}

To better understand the structure of our C code, I will now use a PlantUML diagram. This diagram illustrates the main components of the C code.

@startuml

!theme toy

participant "sem_wait_post Process" as wait_post

participant "sem_open()" as sem_open

participant "sem_wait()" as sem_wait

participant "printf()" as printf

participant "sleep()" as sleep

participant "sem_post()" as sem_post

participant "sem_close()" as sem_close

wait_post -> sem_open : Open the semaphore

sem_open -> wait_post : Semaphore reference

wait_post -> sem_wait : Wait (decrement) semaphore

sem_wait -> wait_post

wait_post -> printf : Print "Entered the critical section"

wait_post -> sleep : Simulate work in critical section

sleep -> wait_post

wait_post -> printf : Print "Leaving the critical section"

wait_post -> sem_post : Post (increment) semaphore

sem_post -> wait_post

wait_post -> sem_close : Close the semaphore

sem_close -> wait_post

@enduml

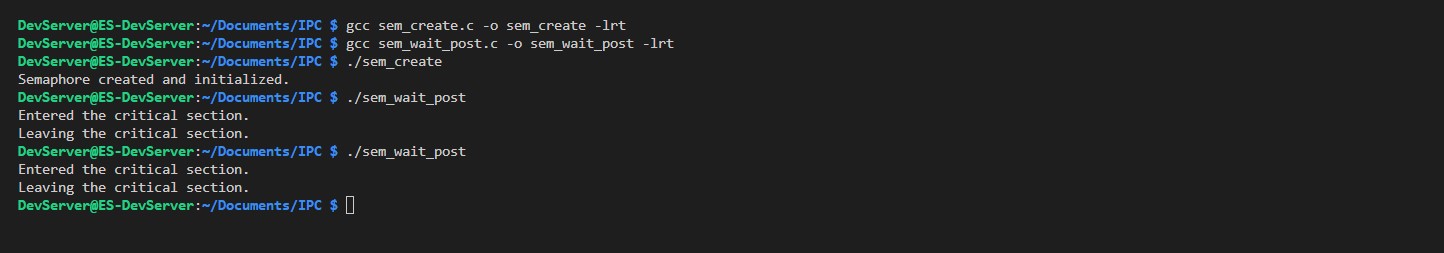

Compilation and Execution

Compile both programs:

gcc sem_create.c -o sem_create -pthread

gcc sem_wait_post.c -o sem_wait_post -pthread

Run sem_create first to create and initialize the semaphore:

./sem_create

Then, run sem_wait_post in multiple terminals or multiple times:

./sem_wait_post

Explanation

- Semaphore Creation (sem_create.c): This program creates a named semaphore using sem_open with the O_CREAT and O_EXCL flags. The semaphore is initialized to 1, indicating that one resource is available or that one process can enter a critical section.

- Waiting and Posting (sem_wait_post.c): This program demonstrates waiting for the semaphore (entering the critical section) and posting to the semaphore (leaving the critical section). sem_wait decrements the semaphore. If it's zero, the process blocks until the semaphore is greater than zero. sem_post increments the semaphore, potentially unblocking a waiting process.

- Critical Section: The critical section is simulated with a call to sleep(), representing work that requires synchronized access.

Cleaning Up

After you're done with the semaphore, you should remove it to clean up system resources. This can be done by calling sem_unlink(SEM_NAME); in either program after you're sure it's no longer needed by any process.

This simple example shows how semaphores can be used to synchronize access to shared resources or critical sections between different processes, preventing race conditions and ensuring that only one process accesses the critical section at a time.
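The "one process at a time" property can also be observed inside a single program. The following is a hedged sketch, not part of the two programs above: the semaphore name /demo_binary_sem and the helper name semaphore_is_exclusive() are hypothetical, and sem_trywait() is used in place of a second process so that the attempt fails immediately instead of blocking. It also shows the sem_unlink() cleanup step.

```c
#include <errno.h>
#include <fcntl.h>    /* For O_* constants */
#include <semaphore.h>
#include <sys/stat.h> /* For mode constants */

#define DEMO_SEM "/demo_binary_sem" /* hypothetical semaphore name */

/* Hypothetical helper: returns 1 if a second attempt to take the semaphore
 * is refused while it is held and succeeds after it is released,
 * 0 if not, and -1 if the semaphore could not be created. */
int semaphore_is_exclusive(void)
{
    sem_unlink(DEMO_SEM); /* drop a stale semaphore from an earlier run */
    sem_t *sem = sem_open(DEMO_SEM, O_CREAT | O_EXCL, 0600, 1);
    if (sem == SEM_FAILED)
        return -1;

    int ok = 0;
    if (sem_wait(sem) == 0) {          /* value 1 -> 0: enter */
        /* A second taker would block here; sem_trywait reports EAGAIN. */
        int refused = (sem_trywait(sem) == -1 && errno == EAGAIN);
        sem_post(sem);                 /* value 0 -> 1: leave */
        /* Now the semaphore is free again, so this attempt succeeds. */
        ok = refused && (sem_trywait(sem) == 0);
    }

    sem_close(sem);
    sem_unlink(DEMO_SEM); /* remove the name so resources are released */
    return ok;
}
```

In the two-program example, the same refusal shows up as a second ./sem_wait_post instance pausing inside sem_wait() until the first instance calls sem_post().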

Conclusion

These examples give you a starting point for using IPC mechanisms in Linux with C. Each IPC mechanism has its own use cases and peculiarities, so it's worth exploring them further as per your requirements.

🏷️ Author position : Embedded Software Engineer

🔗 Author LinkedIn : LinkedIn profile
